The Linux Foundation ONE Summit was held April 29 – May 1, 2024, at the San Jose Convention Center. According to its website, the Linux Foundation provides a proven, repeatable method to scale open-source software project communities via a comprehensive portfolio of support programs. As of 2023, the Linux Foundation had over 1,000 active projects covering numerous technology sectors, including Artificial Intelligence and Machine Learning (AI/ML), cloud, containers and virtualization, and networking and edge. In December 2023, the Open Networking Foundation (ONF) announced that its portfolio of open-source networking projects would become independent projects under the Linux Foundation. This merger has resulted in three new project-directed funds under the Linux Foundation (LF):

LF Broadband: Supports a collection of projects for broadband and passive optical networks, including the Software Defined Networking (SDN)-Enabled Broadband Access (SEBA) reference design and Virtual Optical Line Termination Hardware Abstraction (VOLTHA).

Aether: Supports the portfolio of 5G mobile networking projects, including Aether (private 5G and edge computing), SD-Core (open 5G mobile core), and SD-RAN (open RAN).

P4: Supports work enabling programmability of the networking data plane, including P4 architecture, language, compilers, APIs, applications, and platforms.

Keynotes

The Linux Foundation, Walmart, ZEDEDA, Google Cloud, Deutsche Telekom, Turk Telekom, Verizon, Radisys, Qualcomm, and the Department of Defense gave keynote presentations. Arpit Joshipura, General Manager of Networking, IoT, and Edge for the Linux Foundation, presented the first keynote. Mr. Joshipura highlighted the expansion of Linux Foundation open-source projects across the Enterprise, User Edge, and Service Provider Edge, including Open Radio Access Network (O-RAN), OpenAirInterface, and SD-RAN. In the Core & Cloud space, recent additions to the Linux Foundation include extended Berkeley Packet Filter (eBPF), Software for Open Networking in the Cloud (SONiC), DENT, CAMARA, and SD-Core. Mr. Joshipura also highlighted the Open Programmable Infrastructure (OPI) Project’s announcement of a new lab. The OPI Lab, located in California, is available to all OPI members and will focus on provisioning and lifecycle frameworks for Data Processing Units (DPUs) and Infrastructure Processing Units (IPUs). OPI’s current Premier Members include Arm, F5, Intel, Keysight, Marvell, NVIDIA, Red Hat, Tencent, and ZTE.

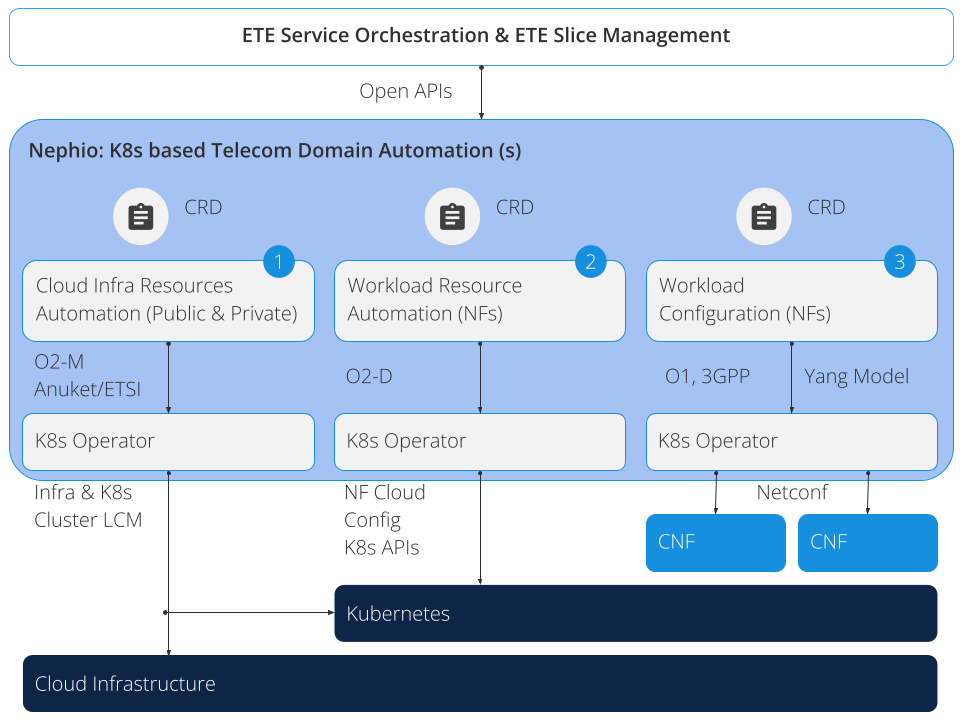

Mr. Joshipura also moderated an expert panel discussion with Dr. Jan Uddenfeldt, President and CEO of jUTechnology; Martin Casado of Andreessen Horowitz; and Dell EMC Fellow Radia Perlman.

Dave Temkin, Vice President of Infrastructure Services at Walmart, presented “Leveraging Open-Source for Operational Excellence.” Mr. Temkin outlined the impressive scale of Walmart’s network, which includes over 100,000 routers and switches and over 450,000 wireless access points. He shared that Walmart is currently deploying Enterprise SONiC at scale in its data centers and has seen incredible results in operational excellence. Mr. Temkin also described how Walmart has adopted L3AF to provide lifecycle management of eBPF programs for applications such as Direct Server Return, Walmart’s scalable internal Layer 4 load-balancing solution.

Ankar Jain and Kandan Kathirvel of Google presented “Future of Networks, AI-Driven Network Transformation,” sharing the state of Nephio. Nephio is a Kubernetes-based, intent-driven automation tool for network functions and the underlying infrastructure that supports them. The following diagram from the Linux Foundation depicts the Nephio architecture:

Nephio provides open application programming interfaces (APIs) through which users express intent that drives cloud configuration management. Custom Resource Definition (CRD) models are defined for each layer of the stack: Cloud Infrastructure, Kubernetes, and Cloud Native Functions (CNFs). Ankar and Kandan summarized features from Nephio Releases 1 and 2 and outlined the move toward intent generation and handling through conversational Artificial Intelligence (AI) and AI-driven generation of configurations that fulfill user intent, among other AI-related features.
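Intent-driven automation of this kind rests on the Kubernetes controller pattern, which Nephio builds on: a controller repeatedly compares declared (desired) state with observed state and acts to close the gap. The sketch below is a simplified, hypothetical illustration of that reconciliation step, not Nephio code. The resource kinds (`Cluster`, `NetworkFunction`), the `reconcile` function, and the spec fields are invented for illustration and loosely map to the infrastructure and CNF layers mentioned above.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified model: a resource is identified by (kind, name) and
# carries a spec dict. Real Nephio CRDs and controllers are far richer.
Key = Tuple[str, str]
Spec = Dict[str, object]
Action = Tuple[str, Key]


def reconcile(desired: Dict[Key, Spec], observed: Dict[Key, Spec]) -> List[Action]:
    """Return the actions a controller would take to move observed state
    toward desired state (one reconciliation pass)."""
    actions: List[Action] = []
    for key, spec in desired.items():
        if key not in observed:
            actions.append(("create", key))   # intent declared, nothing running
        elif observed[key] != spec:
            actions.append(("update", key))   # running, but drifted from intent
    for key in observed:
        if key not in desired:
            actions.append(("delete", key))   # running, but no longer intended
    return actions


if __name__ == "__main__":
    desired = {
        ("Cluster", "edge-01"): {"nodes": 3},          # infrastructure layer
        ("NetworkFunction", "upf-1"): {"replicas": 2},  # CNF layer
    }
    observed = {
        ("Cluster", "edge-01"): {"nodes": 2},
        ("NetworkFunction", "smf-old"): {"replicas": 1},
    }
    for action in reconcile(desired, observed):
        print(action)
```

Because the controller only ever computes a diff between intent and reality, the same loop handles first deployment, drift correction, and decommissioning; users change the declared intent rather than issuing imperative commands.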

Alex Jinsung Choi, Senior Vice President at Deutsche Telekom, gave a keynote on open-source O-RAN. He outlined how the O-RAN Alliance is pursuing its mission of reinventing the RAN through open disaggregation, standards-based compliance, demonstrated interoperability, and implementation neutrality. Mr. Choi noted that the O-RAN Alliance has 32 operator members, 269 contributing organizations, and 3,582 active experts.

Ahmet Fehti Ayhan gave a presentation titled “Turk Telekom Access Domain Evolution.” He presented Turk Telekom’s SDN-Enabled Broadband Access (SEBA) initiative, which has delivered CAPEX/OPEX savings, shorter operations and business support system (OSS/BSS) integration times, lower error rates, and vendor independence for Turk Telekom.

Alicia Miller, Ecosystem Development Lead at Verizon, presented a keynote on the market potential, value, and key challenges of network APIs such as 5G Edge and ThingSpace. Alicia highlighted a McKinsey & Company study that estimated a $100B to $300B potential opportunity for network APIs.

I was unable to attend the remaining keynotes.

Breakout Presentations

I attended several breakout presentations. One that stood out was “Applying AI/ML Methods to Diagnose Network Issues using Telemetry Data,” by Cisco Distinguished Engineer Frank Brockners. Frank posed the following questions:

- “Can we leverage the wealth of information available in a device to have it tell us in natural language what its state is?”

- “Can we treat logs and even feature names in timeseries as natural language and leverage associated methods to interpret them?”

Frank proceeded with an example: root cause analysis of a Kubernetes Flannel log using AI. One might ask, “Why not just ask a large language model (LLM) directly what might be wrong in the file?” Quite often, this is not feasible, because the syslog file to be analyzed is larger than the context window of the LLM. Frank’s example log file had 4,490 lines and could not fit into GPT-4’s context window. Frank articulated two concepts for analyzing telemetry data:

- Retrieve the relevant information from the file: Reduce the size of the file in an unsupervised way so that it fits within the LLM’s context window without losing the “relevant information.” Relevant information describes the state of the system or entity that generated the file. Essentially, the intent is to retrieve “signals” from the file and eliminate “noise.”

- Use the retrieved “relevant information” as part of a prompt to an LLM: Ask the LLM to diagnose the issue and propose a resolution.
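The two steps above can be sketched as a small pipeline: given a relevance score for each log line (from any retrieval method), keep the highest-scoring lines that fit a token budget, then wrap them in a diagnostic prompt. This is a minimal sketch under stated assumptions, not Frank’s implementation. The function names are hypothetical, the roughly-four-characters-per-token estimate is a common rule of thumb rather than a real tokenizer, and no LLM API is called.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    # Assumption: ~4 characters per token for English-like text. A real
    # pipeline would count tokens with the target model's own tokenizer.
    return max(1, len(text) // 4)


def select_relevant(lines: List[str], scores: List[float], budget_tokens: int) -> List[str]:
    """Step 1: greedily keep the highest-scoring lines that fit within the
    token budget, then restore original order so the log reads chronologically."""
    ranked = sorted(range(len(lines)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    used = 0
    for i in ranked:
        cost = estimate_tokens(lines[i])
        if used + cost > budget_tokens:
            continue  # too large for the remaining budget; try smaller lines
        kept.append(i)
        used += cost
    return [lines[i] for i in sorted(kept)]


def build_prompt(relevant: List[str]) -> str:
    """Step 2: embed the retrieved lines in a prompt asking for a diagnosis."""
    return (
        "The following lines were extracted from a system log.\n"
        "Describe the technical issue they indicate and propose a resolution.\n\n"
        + "\n".join(relevant)
    )
```

Restoring the original line order after selection is a deliberate choice: the LLM sees the surviving events in the sequence they occurred, which tends to matter for causal reasoning about failures.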

Several methods have been proposed to retrieve “relevant information” from a file. Some methods are purely statistical; others are based on embeddings or a combination of statistical and embeddings-based approaches.

Frank described a simple statistical method for retrieving relevant information, based on token frequencies, that showed promising results in several cases for both syslog and time series data. The method, Term Frequency-Inverse Document Frequency (TF-IDF), follows the intuition that “Frequently seen rare tokens are important.” TF-IDF is commonly used for keyword extraction in documents.
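To make the TF-IDF idea concrete for logs, one can treat each log line as a “document.” For a token t in line d, with N lines in total, the standard weight is tf-idf(t, d) = tf(t, d) × log(N / df(t)), where tf(t, d) is the token’s share of the line and df(t) is the number of lines containing t. Tokens present in every line get a weight of zero. The sketch below is an assumption-laden illustration, not the method as presented: the tokenizer (alphabetic runs only, so timestamps and counters are masked) and the choice to score a line by the mean weight of its distinct tokens are my own.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(line: str) -> List[str]:
    # Lowercase and keep alphabetic runs only; dropping digits masks timestamps,
    # PIDs, and counters that would otherwise make every line look unique.
    return re.findall(r"[a-z]+", line.lower())


def tfidf_line_scores(lines: List[str]) -> List[float]:
    """Score each log line by the mean TF-IDF weight of its distinct tokens,
    treating every line as one document."""
    docs = [tokenize(line) for line in lines]
    n = len(docs)
    # Document frequency: the number of lines in which each token appears.
    df = Counter(tok for doc in docs for tok in set(doc))
    scores: List[float] = []
    for doc in docs:
        if not doc:
            scores.append(0.0)
            continue
        tf = Counter(doc)
        weights = [(tf[t] / len(doc)) * math.log(n / df[t]) for t in tf]
        scores.append(sum(weights) / len(weights))
    return scores
```

On a log where most lines repeat the same “everything is working” vocabulary, those lines share tokens with high document frequency and score low, while a one-off error line made of rare tokens scores high.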

Frank applied these concepts to the syslog file and later to the feature names of streaming-telemetry time series files, so that rarely occurring, informative lines or rows bubbled to the top while repetitive, “everything is working” lines sank to the bottom. Finally, given the relevant information from the syslog file or the streaming telemetry time series file, GPT-4 was prompted to create a technical description of the issue and propose a resolution. For the syslog file, GPT-4 correctly diagnosed the root cause: Flannel could not find the required IPv4 and IPv6 address configuration information. It recommended providing these IP addresses to resolve the issue. For the streaming telemetry time series, GPT-4 also correctly diagnosed the issue as a bidirectional forwarding detection (BFD) session failure. Frank’s presentation highlighted the enormous potential of AI to transform fault management on network devices.
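The presentation did not spell out how feature names were turned into documents, so the following is only one plausible interpretation, offered as a hypothetical sketch. Each telemetry sample (row) becomes a document made of the words in the names of features whose values changed since the previous sample; rows are then ranked by mean TF-IDF so that rare changes surface first. The feature names used here (`interface/in-octets`, `bfd/session/state`) are invented for illustration and are not real telemetry paths.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def name_tokens(feature: str) -> List[str]:
    # Split hierarchical feature names such as "bfd/session/state" into words.
    return re.findall(r"[a-z]+", feature.lower())


def changed_features(rows: List[Dict[str, float]]) -> List[List[str]]:
    """For each sample, list features whose value differs from the previous
    sample. Assumption: the first row has no predecessor, so it is empty."""
    docs: List[List[str]] = [[]] if rows else []
    for prev, cur in zip(rows, rows[1:]):
        docs.append([f for f in cur if cur[f] != prev.get(f)])
    return docs


def rank_rows(rows: List[Dict[str, float]]) -> List[int]:
    """Return row indices ordered from most to least informative by mean TF-IDF."""
    docs = [[tok for f in feats for tok in name_tokens(f)] for feats in changed_features(rows)]
    n = len(docs)
    df = Counter(tok for doc in docs for tok in set(doc))

    def score(doc: List[str]) -> float:
        if not doc:
            return 0.0
        tf = Counter(doc)
        return sum((tf[t] / len(doc)) * math.log(n / df[t]) for t in tf) / len(tf)

    # sorted() is stable, so rows with equal scores keep their original order.
    return sorted(range(n), key=lambda i: score(docs[i]), reverse=True)
```

In this framing, counters that change on every sample behave like the repetitive “everything is working” log lines, while a state feature that flips once (such as a BFD session going down) contributes rare tokens and pushes its row to the top.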

Overall Comments

Based on multiple presentations at recent conferences, I believe there may be an industry movement to leverage AI to replace tier 1 network operations support engineers over time. In this paradigm, tier 1 troubleshooting of common problems would largely be performed by AI, and more complex issues would be escalated to tier 2/3 human engineers. My concern with this paradigm is that it would reduce opportunities for engineers to follow the natural career progression of troubleshooting tier 1 network problems and then growing into tier 2/3 engineers, ultimately reducing the number and quality of tier 2/3 network engineers. My suggestion for network operations centers, and by analogy for security operations centers, is to maintain human operators and engineers in traditional tiers and use AI to empower them, not to replace them.

The 2024 Linux Foundation ONE Summit had several hundred attendees and 15 displays in its vendor showcase. While relatively small, I found the summit highly informative. The moderate size of this year’s conference lent itself to substantive, unhurried conversations; it was a great opportunity to “pick the brains” of industry experts on multiple Linux Foundation projects. I recommend this conference to anyone who develops or uses Linux Foundation tools and wants to expand their professional network.

(Thanks to Frank Brockners, Cisco Distinguished Engineer, for his suggestions and edits)