The Open Compute Project (OCP) Global Summit was held at the San Jose Convention Center from October 17 through 19, 2023. OCP was launched in 2011 as a collaborative community focused on redesigning hardware technology to efficiently support the growing demands on compute infrastructure. This year’s OCP Global Summit sold out for the first time, drawing more than 4,300 attendees. Keynote presentations were given by technology providers Intel, Samsung, Marvell, AMD, Broadcom, and Promersion, and by cloud hyperscalers Google, Microsoft, and Meta. The conference included 16 technology tracks, and the Exposition Hall hosted more than 75 vendors. I attended two full days, October 17 and 18. As a network engineer, I was drawn to the Linux Foundation’s Software for Open Networking in the Cloud (SONiC) workshop on October 17 and the DENT mini-summit on October 18. I would summarize the overarching question of the conference as follows: how do modern cloud platforms and their suppliers meet the staggering compute, memory, storage, and network requirements posed by Generative AI?

Keynotes

Each keynote speaker highlighted current and future challenges that Generative AI poses to large-scale data centers. Dr. Zane Ball of Intel gave the first keynote among the technology providers. Dr. Ball highlighted the challenge of power consumption, citing a McKinsey study projecting that global electric power consumption will triple by 2050. Today’s data centers account for roughly 1 to 1.3% of global electricity consumption, and Generative AI will likely drive this share far higher. Ball suggested several ways to mitigate data center power consumption, estimating that processor-level efficiencies could yield 50% power improvements and that data-center-level improvements, such as liquid cooling, could yield 30% power improvements.

JongGyu Park of Samsung addressed the challenges Generative AI poses to memory. Processor performance has grown by more than 10,000X since 1980, while memory performance has grown by only about 10X over the same period. Park presented an estimate that electric power consumption due to memory will grow more than 15X from 2020 to 2030, and highlighted Samsung’s petabyte-scale solid state drives (PBSSDs) as a technology to help close this performance gap.

Loi Nguyen of Marvell described the potential for Generative AI to massively increase data center power consumption. Nguyen said that current data centers consume roughly 32 megawatts on average, but that Generative AI could drive that figure to 1 gigawatt, roughly the output of one nuclear power plant. He described the race to develop faster optical links, noting that optical link throughput has increased 40X, from 40 gigabits/second in 2014 to 1.6 terabits/second today. He estimated that network components can account for 12% of power usage in large-scale AI clusters.
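
To put the growth figures above on a common footing, the short Python sketch below converts each quoted multiple into an implied compound annual growth rate. This is my own back-of-the-envelope calculation, not something presented at the summit: it assumes 2023 as the end year for every figure, treats Samsung’s “more than 10,000X” as exactly 10,000X, and the helper name `cagr` is made up for illustration.

```python
"""Back-of-the-envelope conversion of keynote growth figures into implied
compound annual growth rates (CAGR). Assumes 2023 as the end year for every
figure; these rates are derived here, not quoted by the speakers."""


def cagr(factor: float, years: float) -> float:
    """Return the constant annual growth rate that compounds to `factor` over `years`."""
    if factor <= 0 or years <= 0:
        raise ValueError("factor and years must be positive")
    return factor ** (1.0 / years) - 1.0


# (label, total growth factor, span in years), using the figures quoted in the keynotes
FIGURES = [
    ("Processor performance, 1980-2023", 10_000, 2023 - 1980),
    ("Memory performance, 1980-2023", 10, 2023 - 1980),
    ("Optical link throughput, 2014-2023", 1600 / 40, 2023 - 2014),  # 40 Gb/s -> 1.6 Tb/s
]

if __name__ == "__main__":
    for label, factor, years in FIGURES:
        print(f"{label}: {factor:g}x over {years} years = {cagr(factor, years):.1%} per year")
    # Marvell's scenario: roughly 32 MW per data center today versus 1 GW (1,000 MW).
    print(f"Data center power scale-up: {1000 / 32:g}x")
```

Run as a script, it reports roughly 24% per year for processors, 5.5% for memory, and 51% for optical links, which is one way to see why the processor-memory gap compounds so quickly.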

Forrest Norrod of AMD spoke about the massive power increase Generative AI demands from processors, and argued that consolidating existing data center workloads onto processors optimized for those workloads could significantly reduce data center space and power requirements. Norrod observed that Moore’s Law, the doubling of the number of transistors on a computer chip roughly every two years, appears to be leveling off. Ram Velaga of Broadcom argued that Ethernet is a viable network technology for meeting the demands of Generative AI now and into the future. Velaga also observed that Moore’s Law appears to be leveling off.
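
Written as a formula, the Moore’s Law statement above is simple exponential growth. In this sketch, N_0 is the transistor count in a reference year, t is elapsed time in years, and T is the doubling period (two years in the classic formulation); the notation is my own shorthand, not taken from the keynotes.

```latex
N(t) = N_0 \cdot 2^{t/T}, \qquad T = 2\ \text{years}
\quad\Longrightarrow\quad
\frac{N(t + 10)}{N(t)} = 2^{10/2} = 32
```

One way to read “leveling off” under this model is that the effective doubling period T is stretching beyond two years, so the per-decade multiple falls below 32X.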

Partha Ranganathan of Google gave the first keynote among the large-scale cloud adopters, tracing Google’s planet-scale computing solutions from Search in 1998 to Bard in 2023. Ranganathan presented the training compute requirements of Google’s PaLM 2 which, at 1 billion petaFLOPs (about 10^24 floating-point operations), significantly exceed those of GPT-3, an earlier large language model (LLM). Reynold D’Sa of Microsoft spoke about the dramatically widening gap between the computational needs of model training and the capabilities of key infrastructure components, estimating that memory performance would grow by only 1.6X over 2 years, while interconnect throughput would grow by only 1.4X over the same period.

Dan Rabinovitsj of Meta presented a graph characterizing AI systems as requiring multi-dimensional design that can be optimized along five axes: compute, memory capacity, memory bandwidth, network latency sensitivity, and network bandwidth. Rabinovitsj went on to describe how different AI workloads strain different axes: LLM training strains the compute axis, LLM inference pushes the memory capacity axis, LLM inference decoding stretches the memory bandwidth axis, and ranking/recommendation training strains the network bandwidth axis. As a communication network engineer, I will make the nitpicking observation that “network capacity” is a more precise term than “network bandwidth” in this context; bandwidth is a radio frequency parameter describing the range of frequencies within a given band used to transmit a signal.
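
For scale, the PaLM 2 training figure converts to floating-point operations as follows. The GPT-3 value, about 3.14 × 10^23 operations for the 175-billion-parameter model, comes from the GPT-3 paper rather than from the keynote, and the comparison assumes both numbers count total training operations.

```latex
1\ \text{billion petaFLOPs} = 10^{9} \times 10^{15}\ \text{FLOPs} = 10^{24}\ \text{FLOPs},
\qquad
\frac{10^{24}}{3.14 \times 10^{23}} \approx 3.2
```

On these assumptions, PaLM 2 used roughly three times the training compute of GPT-3.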

SONiC Workshop

I attended the Software for Open Networking in the Cloud (SONiC) workshop on Tuesday afternoon, October 17. SONiC is an open source, Linux-based network operating system originally developed by Microsoft and contributed to OCP. In 2022, Microsoft transferred oversight of the project to the Linux Foundation. This section summarizes selected presentations. European telco Orange presented its use of SONiC open switches, implemented on EdgeCore DCS 201 hardware, as a VLAN aggregation solution for 1 and 10 gigabit/second fiber to the office (FTTO) and fiber to the enterprise (FTTE). Dell presented the use of SONiC on its hardware platforms as an Ethernet fabric for Generative AI workloads. Dell articulated several factors that make AI fabrics unique, including high injection and bisection throughput, high sustained traffic levels, low entropy traffic (i.e., a small number of large, similar flows that hash-based load balancing spreads poorly), tail latency sensitivity, and latency sensitivity for AI inference. Alibaba presented its field testing of SONiC running on the AMD Pensando Data Processing Unit (DPU) SmartNIC in a cloud edge gateway use case, connecting edge nodes to the Alibaba cloud and the Internet.

Broadcom and Alibaba gave a joint presentation, “Tackling Challenges in FRR Integration and Scaling Up Routing Features,” on improving integration between Free Range Routing (FRR) and SONiC. The presentation emphasized the importance of bringing together contributors from both the FRR and SONiC communities to improve white box support for routing features. Initial areas of focus include communication between the kernel, FRR, and SONiC; reducing the memory footprint of the FRR routing table; reducing BGP route loading time; and improving routing convergence time.
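
Dell’s “low entropy” point is easiest to see with equal-cost multipath (ECMP) hashing, which pins each flow to one uplink based on its packet headers. The Python sketch below is illustrative only and was not part of the Dell presentation: it assumes 8 equal-cost uplinks, uses CRC32 over the 5-tuple as a stand-in hash (real switches use vendor-specific hash functions), and the addresses and ports are made up.

```python
import zlib
from collections import Counter
from typing import Iterable, Tuple

FiveTuple = Tuple[str, str, int, int, int]  # src IP, dst IP, protocol, src port, dst port
NUM_UPLINKS = 8  # assumption: eight equal-cost uplinks


def ecmp_uplink(flow: FiveTuple) -> int:
    """Map a flow to an uplink by hashing its 5-tuple (CRC32 is a stand-in hash)."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % NUM_UPLINKS


def uplink_load(flows: Iterable[FiveTuple]) -> Counter:
    """Count how many flows the hash places on each uplink."""
    return Counter(ecmp_uplink(flow) for flow in flows)


# Low entropy: four large RoCEv2-style flows (UDP destination port 4791),
# giving the hash only four distinct 5-tuples to work with.
low_entropy = [(f"10.0.0.{i}", f"10.0.1.{i}", 17, 49152, 4791) for i in range(1, 5)]

# High entropy: many small TCP flows with varied source ports.
high_entropy = [("10.0.0.1", "10.0.1.1", 6, 1024 + i, 443) for i in range(10_000)]

if __name__ == "__main__":
    low = uplink_load(low_entropy)
    high = uplink_load(high_entropy)
    print(f"low entropy:  {len(low)} of {NUM_UPLINKS} uplinks carry traffic")
    print(f"high entropy: {len(high)} of {NUM_UPLINKS} uplinks carry traffic, "
          f"flows per uplink ranging {min(high.values())}-{max(high.values())}")
```

A handful of large flows can occupy at most a handful of uplinks no matter how much data they carry, while thousands of varied flows spread across all eight.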

DENT Mini-summit

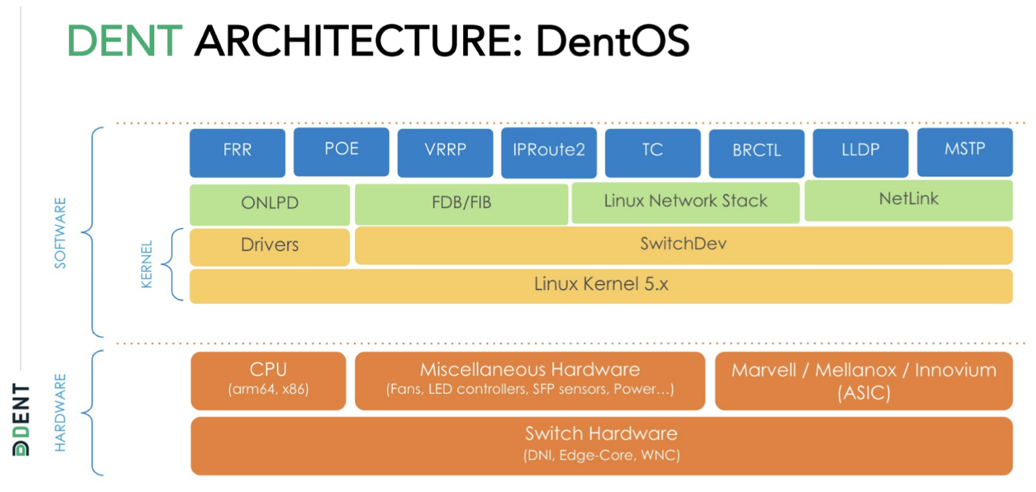

On Wednesday, October 18, I attended the DENT mini-summit. DENT was launched as an open source project under the Linux Foundation in 2019. Charter members included Amazon, EdgeCore, Marvell, and Cumulus Networks, among others. The mission was to develop a stable operating system for Ethernet switches aimed at enterprise branch offices. The figure above depicts the DENT architecture; a description of the architectural components may be found here. Through the presentations, I learned that SONiC and DENT are two different animals. SONiC has a complex feature set and is typically deployed in large data centers, drawing on hardware, software, and support from an ecosystem that includes Arista, Cisco, Juniper, Marvell, Mellanox/NVIDIA, and others. By contrast, DENT is relatively simple to deploy in edge environments such as branch offices and retail stores.

The DENT mini-summit presented a list of feature implementation and improvement targets for DENT, including Power over Ethernet (PoE), a firmware upgrader, Link Layer Discovery Protocol (LLDP), 802.1X hardening, and Zero-Touch Provisioning (ZTP), among others. Bruno Banelli of Sartura described the benefits of upstreaming DENT to the official Linux kernel source:

- Stability: Rigorous testing reduces errors

- Long-term maintenance: Community support ensures longevity

- Wider adoption: Upstreaming promotes standardization

- Collaboration: Upstreaming encourages developer teamwork

Taras Chornyi of PLVision and Manodipto Ghose of Keysight gave an interesting presentation and demonstration of DENT Switch Abstraction Interface (SAI) testing using Open Traffic Generator (OTG). OTG is an open testing platform that specifies a declarative, vendor-neutral API for testing layer 2-7 network devices at virtually any scale. snappi is a Python client-side SDK that implements the OTG APIs and serves as the primary API for traffic testing use cases. The OTG test code snippets reminded me of P4 in that the user can define packet header fields and their sizes. After defining test packets, the user can specify the data rate in packets per second for one or more flows. During Q&A, I asked Ghose how Keysight uses OTG internally, and he replied that Keysight implements OTG in both software and hardware for various testing use cases.
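
To make the declarative style concrete without guessing at snappi’s actual method names, here is a library-free Python sketch of the idea: a flow is declared as data (header fields with bit widths, a packet rate, a packet count), and the tooling derives the packed header bytes and the send duration. Every class and field name is hypothetical; this is not the OTG or snappi API.

```python
"""Hypothetical, library-free sketch of declaring a test flow as data.
NOT the snappi/OTG API; all names here are invented for illustration."""
from dataclasses import dataclass
from typing import List


@dataclass
class HeaderField:
    name: str
    bits: int    # field width, declared explicitly as one would in P4
    value: int

    def __post_init__(self) -> None:
        if not 0 <= self.value < (1 << self.bits):
            raise ValueError(f"{self.name}: {self.value} does not fit in {self.bits} bits")


@dataclass
class Flow:
    name: str
    headers: List[HeaderField]
    rate_pps: int   # packets per second
    packets: int    # total packets to send

    def header_bytes(self) -> bytes:
        """Pack the declared fields, in order, into network (big-endian) byte order."""
        total_bits = sum(f.bits for f in self.headers)
        if total_bits % 8:
            raise ValueError("header length must be a whole number of bytes")
        packed = 0
        for f in self.headers:
            packed = (packed << f.bits) | f.value
        return packed.to_bytes(total_bits // 8, "big")

    def duration_s(self) -> float:
        """Time needed to send all packets at the configured rate."""
        return self.packets / self.rate_pps


# An Ethernet II header declared field by field (MAC addresses are made up).
ethernet = [
    HeaderField("dst_mac", 48, 0x0000000000BB),
    HeaderField("src_mac", 48, 0x0000000000AA),
    HeaderField("ether_type", 16, 0x0800),  # IPv4
]
flow = Flow("f1", ethernet, rate_pps=1000, packets=10_000)

if __name__ == "__main__":
    print(flow.header_bytes().hex())  # 0000000000bb0000000000aa0800
    print(flow.duration_s())          # 10.0
```

Declaring field widths explicitly is what gives this style its P4-like feel; the packing step shows how those declarations map onto bytes on the wire.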

In my day job as an aerospace industry professional, I use open source tools such as CORE and EMANE to emulate traffic behavior in wireless edge networks facing power and link capacity constraints. Traffic engineering, e.g., the ability to explicitly route traffic around a degraded or disabled link, is a critical capability. OpenFlow and P4 are two open technologies that can be used to realize traffic engineering in wireless edge networks. However, the industry appears to have moved on from OpenFlow, and Intel has discontinued development of its Tofino platform, a leading hardware target for P4. At OCP, I tried to identify an open switch NOS that would support traffic engineering in edge networks; I do not believe SONiC or DENT is optimized for that use case at this time. Open vSwitch may be the best current solution for traffic engineering in constrained wireless edge networks.
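
As a minimal illustration of routing around a degraded or disabled link, the Python sketch below runs Dijkstra’s shortest-path algorithm over a hypothetical four-node topology, removes one link to model a failure, and raises another link’s cost to model degradation. Node names and costs are invented, and a real deployment would install the resulting path in the forwarding plane (for example, as OpenFlow rules or Open vSwitch flows) rather than print it.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbor: link cost}


def shortest_path(graph: Graph, src: str, dst: str) -> Optional[Tuple[float, List[str]]]:
    """Dijkstra's algorithm; returns (cost, path), or None if dst is unreachable."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, link_cost in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + link_cost, neighbor, path + [neighbor]))
    return None


def set_link(graph: Graph, a: str, b: str, cost: Optional[float]) -> None:
    """Update a bidirectional link's cost; cost=None removes (disables) the link."""
    for u, v in ((a, b), (b, a)):
        if cost is None:
            graph[u].pop(v, None)
        else:
            graph[u][v] = cost


def build_topology() -> Graph:
    """Hypothetical four-node edge network with per-link costs."""
    links = [("A", "B", 1), ("B", "D", 1), ("A", "C", 3), ("C", "D", 2), ("B", "C", 1)]
    graph: Graph = {}
    for a, b, cost in links:
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    return graph


if __name__ == "__main__":
    net = build_topology()
    print(shortest_path(net, "A", "D"))  # (2.0, ['A', 'B', 'D'])
    set_link(net, "B", "D", None)        # B-D link fails
    print(shortest_path(net, "A", "D"))  # (4.0, ['A', 'B', 'C', 'D'])
    set_link(net, "B", "C", 10)          # B-C link degrades
    print(shortest_path(net, "A", "D"))  # (5.0, ['A', 'C', 'D'])
```

Modeling degradation as a higher cost, rather than removing the link outright, keeps a weak link available as a last resort; that is a design choice made for this sketch, not a property of any particular controller.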

Overall, I found the OCP Global Summit to be an excellent forum for understanding how Generative AI may impact large-scale cloud providers and their suppliers. Conference session recordings may be found here.