TL;DR Summary

AvidThink performed a second round of basic throughput and latency tests on AWS Wavelength on Verizon’s network. Verizon provided us with remote access to handsets in Verizon’s test labs in San Francisco, New York City (Bedminster), and Boston (Waltham) (huge thank you to Verizon!). While there was no latency difference between the NorCal EC2 region and SF Wavelength sites, we achieved 8-18ms (20-38%) latency reductions on ICMP pings in the Boston and New York City Wavelength zones. We found that using Wavelength zones had no impact on throughput during our limited testing.

Key takeaways from these extended tests are:

- 5G UW, when you can get it, continues to impress us – getting 1Gbps to 2Gbps download speeds on a mobile phone is fantastic.

- Application developers need to determine their workload placement strategy based on the location of nearby EC2 regions and available Wavelength sites. There are cases where the EC2 region might produce adequate performance without the 20% Wavelength premium.

- To help with the decision, developers will want to track the latency performance for Wavelength sites (per site) over time. We learned that ICMP pings may be prioritized differently on specific carrier networks and will improve our future methodology. Further, as 5G standalone gets rolled out and the RAN topology gets optimized, Wavelength site latencies might drop more.

- It’s important for application developers to understand the difference between latency and available bandwidth and not attribute better application performance to latency when it’s the bandwidth and resultant throughput that matters.
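To make the latency-versus-bandwidth distinction in the last takeaway concrete, here is a minimal back-of-the-envelope sketch. It assumes a single object fetched in one round trip plus serialization time, and it ignores TCP slow start, loss, and server processing. The function name, object sizes, RTTs, and throughput figure are all hypothetical values chosen for illustration, not measurements from our tests.

```python
def transfer_time_s(size_bytes: float, rtt_ms: float, throughput_mbps: float) -> float:
    """Approximate fetch time: one round trip plus time to push the bits through the pipe."""
    round_trip_s = rtt_ms / 1000.0
    serialization_s = (size_bytes * 8) / (throughput_mbps * 1_000_000)
    return round_trip_s + serialization_s


# Hypothetical workloads: a small API response and a large download.
workloads = {"2 KB response": 2_000, "50 MB download": 50_000_000}

for label, size in workloads.items():
    # Same assumed 1 Gbps throughput, with a 20 ms difference in RTT.
    near = transfer_time_s(size, rtt_ms=30, throughput_mbps=1000)
    far = transfer_time_s(size, rtt_ms=50, throughput_mbps=1000)
    print(f"{label}: {near * 1000:.1f} ms at 30 ms RTT vs {far * 1000:.1f} ms at 50 ms RTT")
```

Under these assumptions, the 20 ms RTT gap accounts for nearly all of the small request's time but only a small fraction of the large download's, which is dominated by throughput.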

Recap — Hands-on with AWS Wavelength on Verizon – Part 1

If you haven’t yet read the first part of our series, you can find it here. As a quick summary, AvidThink ran tests on AWS Wavelength in the San Francisco Wavelength zone. We benchmarked the network performance from a mobile phone (user equipment or UE) on Verizon’s network when connecting to AWS servers in the SF Wavelength zone, US-West-1 region (NorCal), and US-West-2 region (Oregon). We found that the round-trip times (RTT) and throughput to the US-West-1 region and the SF Wavelength zone were comparable.

Verizon and AWS Offer Assistance

When we shared our first-round results with our contacts at AWS and Verizon, they graciously offered their assistance. We received tips and input on the testing methodology and learned more about factors that might have affected our results. Verizon offered us the opportunity to use their remote lab setup in other zones. Given the current pandemic lockdowns we’re living under, AvidThink gladly accepted the offer, which accelerated our ability to check out different Wavelength zones from the comfort of our homes.

Updated learnings and hypotheses

In our conversations with AWS and Verizon, and other experts we consulted after our first round of tests, we learned a couple of things about our test methodology:

- While ICMP pings are a universal way to measure RTT, traffic management on carrier networks might impact ICMP traffic. Nevertheless, the ICMP echo-based method we used is valid for relative measures, especially over many samples. The underlying network would treat pings to Wavelength servers and EC2 region servers equally on average, so a differential in performance would likely apply to application traffic running over TCP or UDP. Regardless, our readers should not take the absolute ICMP echo results as directly indicative of latency.

- Mobile networks will have different UE paths through the RAN and the packet gateways into the Wavelength servers. The route and speed will depend on multiple factors (as we’ve indicated in our first post), such as whether it’s a 5G NSA or SA deployment. We realize that MNO topologies are confidential information and didn’t probe the status of each site. However, you can draw your own conclusions from the traceroute logs from the UE, which we’ve included under the Additional Data section at the end.

- 5G is evolving and improving rapidly, but results from 4G LTE networks will likely be more stable. Nevertheless, the ecosystem has strong incentives to quickly mature 5G technology. In particular, 5G handsets can show dramatically improved performance after software updates, and it’s essential to have the latest patches. Fortuitously, we updated our Samsung S20 5G UW to the newest software revision before running our initial tests.
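The first point above, that ICMP results are best read as relative measures over many samples, can be sketched as follows. This is a minimal illustration, not the analysis we ran: the sample values are hypothetical, the function name is our own, and using the median (rather than the averages reported in our tables) is an assumption made here to reduce the influence of outliers.

```python
from statistics import median


def rtt_reduction(region_ms: list[float], edge_ms: list[float]) -> tuple[float, float]:
    """Return (milliseconds saved, percent reduction) comparing median RTTs."""
    region = median(region_ms)
    edge = median(edge_ms)
    saved = region - edge
    return saved, 100.0 * saved / region


# Hypothetical ping samples in milliseconds; real runs would use all 50 pings per server.
region_samples = [46.0, 48.5, 47.2, 51.0, 45.8]
edge_samples = [29.1, 30.4, 28.7, 33.0, 29.9]

saved_ms, percent = rtt_reduction(region_samples, edge_samples)
print(f"Median RTT saved: {saved_ms:.1f} ms ({percent:.1f}% reduction)")
```

Because both sets of pings cross the same carrier network, any ICMP-specific handling should affect them similarly, so the difference is more trustworthy than either absolute number.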

Remote Testing in SF, Boston, and NYC

Since the publication of our first post last week, AWS and Verizon have been busy. They’ve added two more Wavelength sites to the original five, for a total of seven: Boston, SF Bay Area, Atlanta, Washington DC, New York City, Miami, and Dallas.

For round #2 of our tests, Verizon provided us with remote access to mobile handsets in their labs in the SF Bay Area, Boston, and New York City. Unlike in our initial field tests, these UEs sit in a lab, but they connect to commercial mmWave antennas available to the public near the lab. Aside from a connection to a server via the Android Debug Bridge (ADB), which allows for remote access, the Samsung and Motorola phones we used in testing are unmodified retail units.

The test locations are as follows:

- NYC – Washington Valley Rd, Bedminster, NJ

- Boston – Sylvan Rd, Waltham, MA

- SF Bay Area – Spear St, San Francisco, CA

Testing Round #2 Methodology

Our AWS setup for round two was as follows:

- A t3.medium instance in the parent region (Oregon for SF, N. Virginia for Boston/NYC)

- A t3.medium instance inside the Wavelength zone

- A t3.medium instance in the NorCal and Ohio regions (used for comparison)

The tests we ran were identical to our round #1 suite:

- A series of pings (count of 50) against each of the three servers

- TCP upload and download tests in iperf3 against each of the three servers (TCP 1 stream upload and TCP 1, 3, 5 concurrent stream downloads)

- UDP upload and download tests in iperf3 against each of the three servers (UDP upload of 10Mbps, 30Mbps, 100Mbps, download of 10Mbps, 30Mbps, 100Mbps, 200Mbps, 500Mbps, 1Gbps, 2Gbps) – note that we capped the tests at 2Gbps since we started seeing lost packets
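The suite above can be sketched as a list of command lines. This is an illustrative reconstruction, not our actual Termux script: the function name and the target hostname are hypothetical, and we assume the standard `ping -c` and iperf3 client options (`-c` for the server, `-P` for parallel streams, `-R` for reverse mode so the server sends to the phone, `-u` for UDP, and `-b` for the target bitrate).

```python
import shlex


def build_suite(host: str) -> list[list[str]]:
    """Build the ping and iperf3 commands for one target server (nothing is executed)."""
    cmds = [["ping", "-c", "50", host]]

    # TCP: a single upload stream, then 1, 3, and 5 concurrent download streams.
    cmds.append(["iperf3", "-c", host, "-P", "1"])
    for streams in (1, 3, 5):
        cmds.append(["iperf3", "-c", host, "-R", "-P", str(streams)])

    # UDP uploads at fixed target bitrates.
    for rate in ("10M", "30M", "100M"):
        cmds.append(["iperf3", "-c", host, "-u", "-b", rate])

    # UDP downloads (reverse mode), capped at 2 Gbps.
    for rate in ("10M", "30M", "100M", "200M", "500M", "1G", "2G"):
        cmds.append(["iperf3", "-c", host, "-u", "-b", rate, "-R"])
    return cmds


# "wavelength.example.test" is a placeholder, not one of our servers.
for cmd in build_suite("wavelength.example.test"):
    print(shlex.join(cmd))
```

Running the same generated list against each of the three servers keeps the comparison like-for-like; each iperf3 command also assumes an `iperf3 -s` server is already listening on the target instance.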

The phones we used in our testing were:

- Motorola Moto Z3 (for 4G LTE in NYC)

- Galaxy S20+5G (5G UW in SF)

- Galaxy S20+5G (5G UW in Boston)

The suite was remotely executed via a script running in a Termux (Android app) shell instance on the phone, with all other apps shut off.

Round #2 Test Results and Analysis

We were able to rerun our tests in the SF Wavelength zone as a comparison point to our field tests, and we’ll note that the latency from the field test and the ones run within Verizon’s labs are similar. That provided us with a sanity check and gave us confidence that we were not getting artificial results from a lab versus a real-world field test (at least not in the SF Bay Area region). We’d still love to do in-the-wild tests and continue to welcome volunteers.

In NYC/Bedminster, unfortunately, the phones had to be temporarily moved from their lab environment, and as a result, the 5G UW phones were not able to get a signal lock. We were able to run the tests on a Moto Z3 with 4G LTE, though. We’ll update the post with 5G UW results as soon as we can rerun the tests.

Here are the tables that summarize our findings across the test runs.

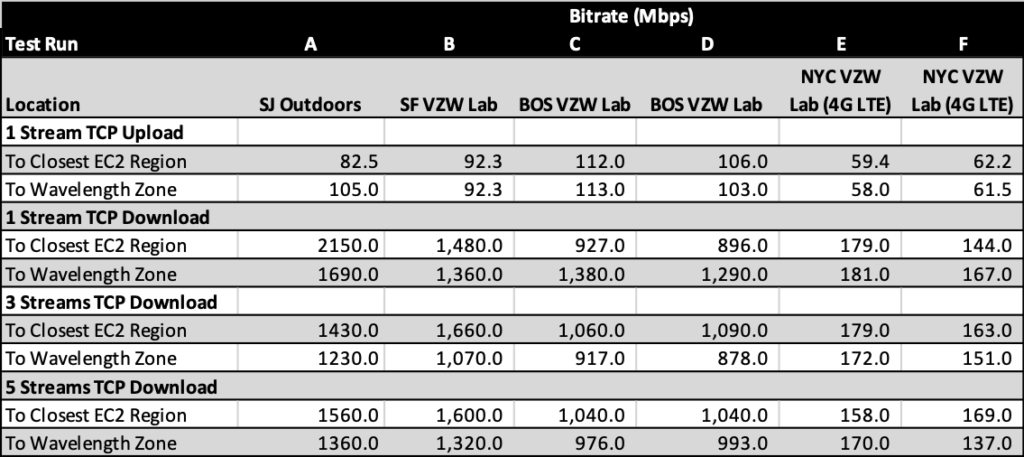

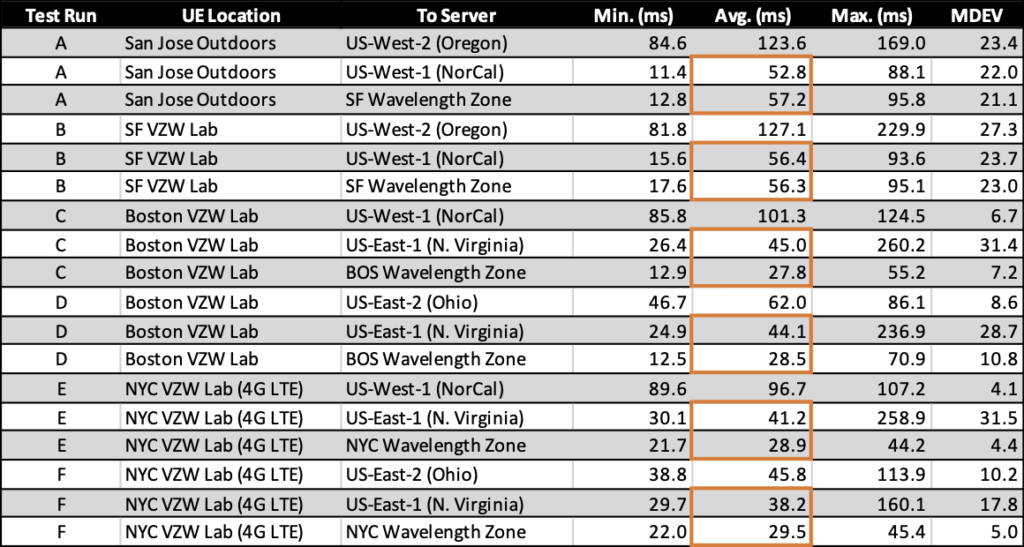

Table 1. Ping Test Summary

Table 2. TCP Test Summary

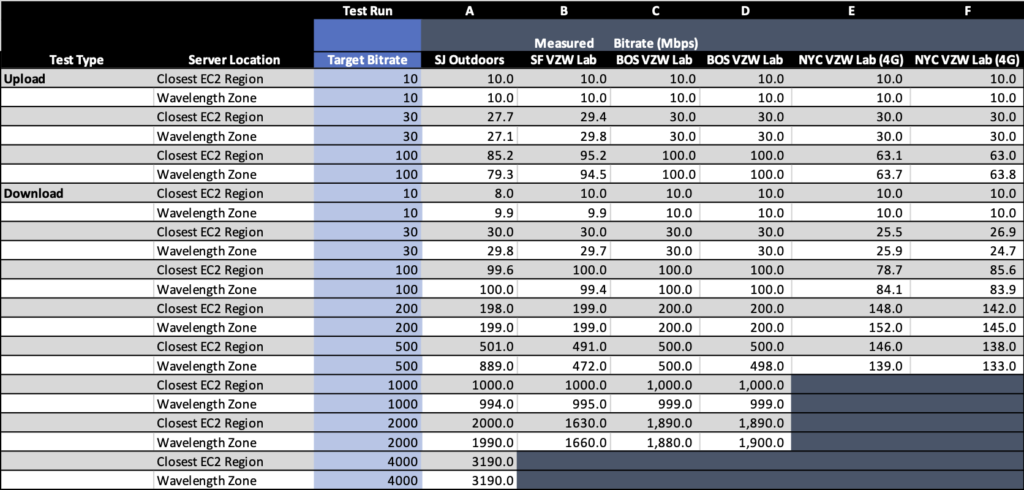

Table 3. UDP Test Summary

Potential Sources of Errors – Updates

The sources of errors described in our first post also apply to this set of tests. The one difference from the last test run is that these phones were in a fixed location and not outdoors.

Updated Observations and Hypotheses

With these additional data points, we updated our analysis:

- Location does matter – latency perspective: Where you are relative to an EC2 region matters. For instance, in our original round of tests, we benefited from having a NorCal EC2 region close to us. In that situation, the SF Wavelength site added little performance value, at least in our testing. There could be situations where the Wavelength site might provide added throughput, improved latency, and lower loss if the network links to the NorCal or Oregon EC2 regions get congested. In the NYC and Boston tests, the difference in RTTs between the Wavelength sites and the parent region was more pronounced, averaging 8-18 ms (a 20-38% reduction in latency). In these locations, if that additional 10-20 ms latency impacts application performance or quality of experience, paying that extra 20% premium for a Wavelength EC2 instance might be worthwhile. As the vast majority of metro areas might not be close to an EC2 region (Oregon, NorCal, N. Virginia, Ohio), Wavelength instances might be useful from a latency perspective.

- Location doesn’t matter – throughput perspective: On the throughput front, though, we did not find any consistent difference in performance when transferring data to and from the parent region versus the Wavelength zone. We did achieve pretty fast speeds on 5G UW: 1Gbps to 2Gbps! The variability from being on the mobile network, and likely from competing neighboring workloads in EC2, outweighed any effect of server location. Again, the caveat is that we’re likely running on a lightly loaded or unloaded backbone link between the mobile core and the EC2 regions in question. Many MNOs have also already optimized their paths to the major hyperscale cloud providers, which would explain the lack of performance differentials between co-located Wavelength EC2 servers and those in Amazon’s cloud data centers.

- Topology matters: We achieved <30 ms latency on average in Boston and NYC (even on a 4G LTE test) but were stuck around 55-57 ms in SF. To the extent the traceroute outputs were accurate, the number of hops to the Wavelength servers appeared to be roughly the same across all three Wavelength zones (9-12 from UE to the server). We’re not sure why SF’s latencies were higher, but it’s likely due to the network topology and network elements, into which we have no visibility. Again, we derived our RTT from ICMP ping times, and the absolute latencies for TCP or UDP traffic may be lower due to traffic management on MNO networks (i.e., ICMP might have lower priority).
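One way to frame the placement decision in the observations above is as a simple latency-budget check. The rule below is our own deliberately simplistic assumption, not AWS or Verizon guidance: the edge only earns its premium when the region misses the application's latency budget and the Wavelength zone meets it. The function names, RTT inputs, and budget are hypothetical; only the roughly 20% premium comes from this article.

```python
WAVELENGTH_PREMIUM = 0.20  # the roughly 20% price premium cited in this article


def edge_worth_premium(region_rtt_ms: float, edge_rtt_ms: float, budget_ms: float) -> bool:
    """True only when the region misses the latency budget and the edge meets it."""
    return region_rtt_ms > budget_ms >= edge_rtt_ms


def edge_monthly_cost(region_monthly_cost: float) -> float:
    """Estimated Wavelength cost for an instance with the given regional cost."""
    return region_monthly_cost * (1 + WAVELENGTH_PREMIUM)


# Hypothetical scenarios with a 35 ms application latency budget.
scenarios = {
    "edge clearly closer": (45.0, 28.0),
    "region and edge comparable": (56.0, 56.0),
    "region already fast enough": (25.0, 20.0),
}
for name, (region_rtt, edge_rtt) in scenarios.items():
    verdict = edge_worth_premium(region_rtt, edge_rtt, budget_ms=35.0)
    print(f"{name}: pay premium? {verdict}")
```

A real decision would also weigh throughput, loss under congestion, and how latency varies over time per site.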

Given our further experience with running many more tests — we’re veteran novices at Wavelength testing now — we have a few additional observations:

- Cloud-powered edges are convenient — The ability to make minor modifications to our EC2 scripts and immediately bring up servers in multiple Wavelength zones across the country reinforces our view from the first article: edges that are extensions of familiar cloud environments present huge value to developers. We could see how we would deploy EC2 servers in edge locations across the world with a few taps of our keyboard (and chalk up a rapidly growing bill while we’re at it). We cannot overstate the convenience. This observation applies to any familiar cloud environment: GCP, Microsoft Azure, and others.

- Network SLAs tied to the cloud will be powerful: As 5G rollouts continue, we expect MNOs to figure out how to define SLAs that make sense for application developers and businesses. We’re waiting for the day when APIs are available that allow orchestrators and applications to provision appropriate SLAs on the network. At that point, we expect that application developers will simply declare what their applications require. As they push out application deployment across edges worldwide, the cloud provider will be responsible for ensuring a common abstraction across every major mobile carrier. App developers should not have to concern themselves with a hodgepodge collection of different APIs or offerings from different carriers. We don’t expect this for a couple more years, given where we are in 5G evolution with Release 16 only locked in recently.

Updated Conclusions and Future Steps

We are grateful for AWS’s and Verizon’s assistance, without which we would not have been able to so rapidly test two more Wavelength zones and run our tests in San Francisco (on top of our original San Jose-based test runs).

By expanding our tests to additional Wavelength sites, we were able to observe the differences across regions. We’ve learned that cloud regions and Wavelength locations are key factors in edge application deployment decisions. Whether the premium for Wavelength makes sense requires a thoughtful exercise in understanding where the consumers are and the type of cloud and edge resources located close to the consumers.

As for future tests, we’re likely to rerun our 5G UW tests in Bedminster soon to get additional data points. As we reach other Wavelength sites, we’ll add to our treasure trove of test runs. AvidThink is recruiting volunteers to run field tests, which we will compare with our lab-run tests. We believe we have Dallas covered, but that leaves five more zones, so reach out if you’re interested.

In terms of methodology, we’re likely to test other instance-types (r5) that are less oversubscribed to see if they impact performance. We’re also looking forward to finding and adapting TCP-based tests that reflect latency to get a more accurate measure of true RTTs. More to come. Stay tuned!
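As one possible starting point for a TCP-based latency test, the sketch below times the TCP three-way handshake, which completes in roughly one round trip. This is an assumption about how such a test could look, not the method we have settled on. The function names are our own, and the demo measures against a throwaway listener on the loopback interface so that it runs anywhere; a real test would point at a server's IP address and an open TCP port instead.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 3.0) -> float:
    """Time a TCP connect; the handshake takes roughly one network round trip."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def demo_on_loopback(samples: int = 5) -> list[float]:
    """Measure against a local listener so the sketch is self-contained."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        listener.listen(16)
        port = listener.getsockname()[1]
        return [tcp_connect_rtt_ms("127.0.0.1", port) for _ in range(samples)]


if __name__ == "__main__":
    print([f"{ms:.3f} ms" for ms in demo_on_loopback()])
```

Passing an IP address rather than a hostname keeps DNS resolution time out of the measurement, and collecting many samples matters here for the same reason it does with ICMP pings.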

As we’ve indicated in the first post, if you have ideas on what further tests we should perform next, or want to chat with us about the edge, drop us a line. You can reach the AvidThink research team at [email protected].

Acknowledgements

For this round of testing, AvidThink would like to thank the AWS and Verizon teams for making time to discuss our results, and the Verizon team for the use of their remote lab facilities. All errors, omissions, and mistakes are the sole responsibility of AvidThink.

Disclosure

As an analyst and advisory firm, AvidThink may have past or ongoing engagements with companies covered by our research. This second round was conducted using Verizon lab resources and Verizon-owned phones but without oversight from or interaction with Verizon or AWS team members. The results of the tests have not been modified by AWS or Verizon. AWS server instances in this round of tests continued to be paid for by AvidThink.

Additional Data

If you’re interested in seeing some of the more detailed tables, reach out to us (there are far too many tables to convert to images).

In the meantime, here are some of the traceroute outputs (note that we used the tracepath command from Termux, just as in the first article):

=== SF VZW LAB to SF WAVELENGTH SERVER ===

1?: [LOCALHOST] pmtu 1428

1: 225.sub-66-174-219.myvzw.com 12.369ms

1: 225.sub-66-174-219.myvzw.com 13.220ms

2: no reply

3: 146.sub-69-83-165.myvzw.com 59.959ms

4: no reply

5: 234.sub-69-83-160.myvzw.com 117.784ms

6: 63.sub-69-82-83.myvzw.com 20.585ms asymm 9

7: 136.sub-69-83-161.myvzw.com 89.504ms

8: 10.210.188.96 21.995ms

9: 155.146.19.60 79.107ms reached

Resume: pmtu 1428 hops 9 back 11

=== SF VZW LAB to N. CAL REGION ===

1?: [LOCALHOST] pmtu 1428

1: 225.sub-66-174-219.myvzw.com 44.797ms

1: 225.sub-66-174-219.myvzw.com 21.061ms

2: no reply

3: 146.sub-69-83-165.myvzw.com 85.366ms

4: no reply

5: 234.sub-69-83-160.myvzw.com 116.489ms

6: 132.sub-69-83-161.myvzw.com 18.232ms

7: 0.csi1.SNVACANX-MSE01-BB-SU1.ALTER.NET 20.034ms asymm 10

8: no reply

9: no reply

10: 0.ae27.GW7.SJC7.ALTER.NET 116.814ms

11: 204.148.55.30 19.808ms asymm 10

12: no reply

13: 52.93.47.46 104.105ms asymm 11

14: 52.93.47.37 115.653ms

15: 54.240.242.63 150.817ms asymm 12

16: 52.93.47.98 19.863ms asymm 11

17: no reply

18: no reply

19: no reply

20: no reply

21: no reply

22: no reply

23: ec2-54-177-222-229.us-west-1.compute.amazonaws.com 92.305ms reached

Resume: pmtu 1428 hops 23 back 18

=== NYC VZW LAB to NYC WAVELENGTH SERVER ===

1?: [LOCALHOST] pmtu 1428

1: 241.sub-66-174-22.myvzw.com 37.978ms

1: 241.sub-66-174-22.myvzw.com 31.271ms

2: no reply

3: no reply

4: 18.sub-69-83-7.myvzw.com 36.995ms

5: no reply

6: 88.sub-69-83-0.myvzw.com 45.690ms asymm 5

7: 21.sub-69-82-3.myvzw.com 30.582ms asymm 10

8: 112.sub-69-83-0.myvzw.com 33.393ms

9: 10.208.134.168 27.924ms

10: 155.146.64.234 26.910ms reached

Resume: pmtu 1428 hops 10 back 12

=== NYC VZW LAB to N VIRGINIA REGION ===

1?: [LOCALHOST] pmtu 1428

1: 241.sub-66-174-22.myvzw.com 35.938ms

1: 241.sub-66-174-22.myvzw.com 46.340ms

2: no reply

3: no reply

4: 18.sub-69-83-7.myvzw.com 34.054ms

5: no reply

6: 88.sub-69-83-0.myvzw.com 48.545ms asymm 5

7: 88.sub-69-83-0.myvzw.com 29.668ms asymm 9

8: 104.sub-69-83-0.myvzw.com 23.283ms

9: 0.csi1.BBTPNJDA-MSE01-BB-SU1.ALTER.NET 34.053ms asymm 13

10: no reply

11: no reply

12: 0.ae16.GW14.IAD8.ALTER.NET 45.141ms asymm 11

13: 204.148.170.66 32.616ms asymm 11

14: no reply

15: no reply

16: 52.93.29.88 41.819ms asymm 27

17: no reply

18: no reply

19: no reply

20: no reply

21: no reply

22: ec2-3-230-148-227.compute-1.amazonaws.com 47.657ms reached

Resume: pmtu 1428 hops 22 back 28

=== BOS VZW LAB to BOS WAVELENGTH SERVER ===

1?: [LOCALHOST] pmtu 1428

1: 203.sub-66-174-20.myvzw.com 24.596ms

1: 203.sub-66-174-20.myvzw.com 16.024ms

2: no reply

3: no reply

4: 130.sub-69-83-13.myvzw.com 28.236ms asymm 3

5: no reply

6: 10.211.63.32 34.788ms asymm 7

7: 10.211.63.36 17.978ms asymm 8

8: 155.146.4.119 15.990ms reached

Resume: pmtu 1428 hops 8 back 11

=== BOS VZW LAB to N VIRGINIA REGION ===

1?: [LOCALHOST] pmtu 1428

1: 203.sub-66-174-20.myvzw.com 13.980ms

1: 203.sub-66-174-20.myvzw.com 22.438ms

2: no reply

3: no reply

4: 130.sub-69-83-13.myvzw.com 68.254ms asymm 3

5: no reply

6: 246.sub-69-83-2.myvzw.com 34.049ms asymm 5

7: 246.sub-69-83-2.myvzw.com 21.511ms asymm 9

8: 101.sub-66-174-17.myvzw.com 14.815ms asymm 7

9: 157.130.93.249 47.059ms asymm 11

10: 0.ae15.GW14.IAD8.ALTER.NET 31.401ms asymm 11

11: 204.148.170.66 29.357ms

12: no reply

13: no reply

14: 52.93.28.216 67.367ms asymm 29

15: no reply

16: no reply

17: no reply

18: no reply

19: no reply

20: ec2-54-166-243-199.compute-1.amazonaws.com 76.332ms reached

Resume: pmtu 1428 hops 20 back 29
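For readers who want to compare these traces programmatically, here is a minimal parsing sketch. The regular expressions are our own assumptions based on the output format shown above rather than a formal tracepath grammar, and the function name is hypothetical. The sample text is the Boston lab to Boston Wavelength trace from this section.

```python
import re

# Matches responding hops such as "8: 155.146.4.119 15.990ms reached".
HOP_RE = re.compile(r"^\s*(\d+)\??:\s+(\S+)\s+([\d.]+)ms(.*)$")
# Matches the closing summary line.
RESUME_RE = re.compile(r"^\s*Resume: pmtu (\d+) hops (\d+) back (\d+)")


def parse_tracepath(text: str) -> dict:
    """Extract responding hops and the summary line from tracepath output."""
    hops, summary = [], {}
    for line in text.splitlines():
        resume = RESUME_RE.match(line)
        if resume:
            summary = {"pmtu": int(resume[1]), "hops": int(resume[2]), "back": int(resume[3])}
            continue
        hop = HOP_RE.match(line)
        if hop:  # "no reply" and the LOCALHOST line do not match and are skipped
            hops.append({
                "ttl": int(hop[1]),
                "host": hop[2],
                "rtt_ms": float(hop[3]),
                "reached": "reached" in hop[4],
            })
    return {"hops": hops, "summary": summary}


SAMPLE = """\
1?: [LOCALHOST] pmtu 1428
1: 203.sub-66-174-20.myvzw.com 24.596ms
1: 203.sub-66-174-20.myvzw.com 16.024ms
2: no reply
3: no reply
4: 130.sub-69-83-13.myvzw.com 28.236ms asymm 3
5: no reply
6: 10.211.63.32 34.788ms asymm 7
7: 10.211.63.36 17.978ms asymm 8
8: 155.146.4.119 15.990ms reached
Resume: pmtu 1428 hops 8 back 11
"""

result = parse_tracepath(SAMPLE)
print(result["summary"], result["hops"][-1])
```

Note that per-hop times in tracepath output are single probes, so they are useful for comparing hop counts and paths rather than for precise latency figures.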